How to Calibrate Sensors with MSA Calibration Anywhere for NVIDIA Isaac Perceptor

Automating Away the Pain of Calibration

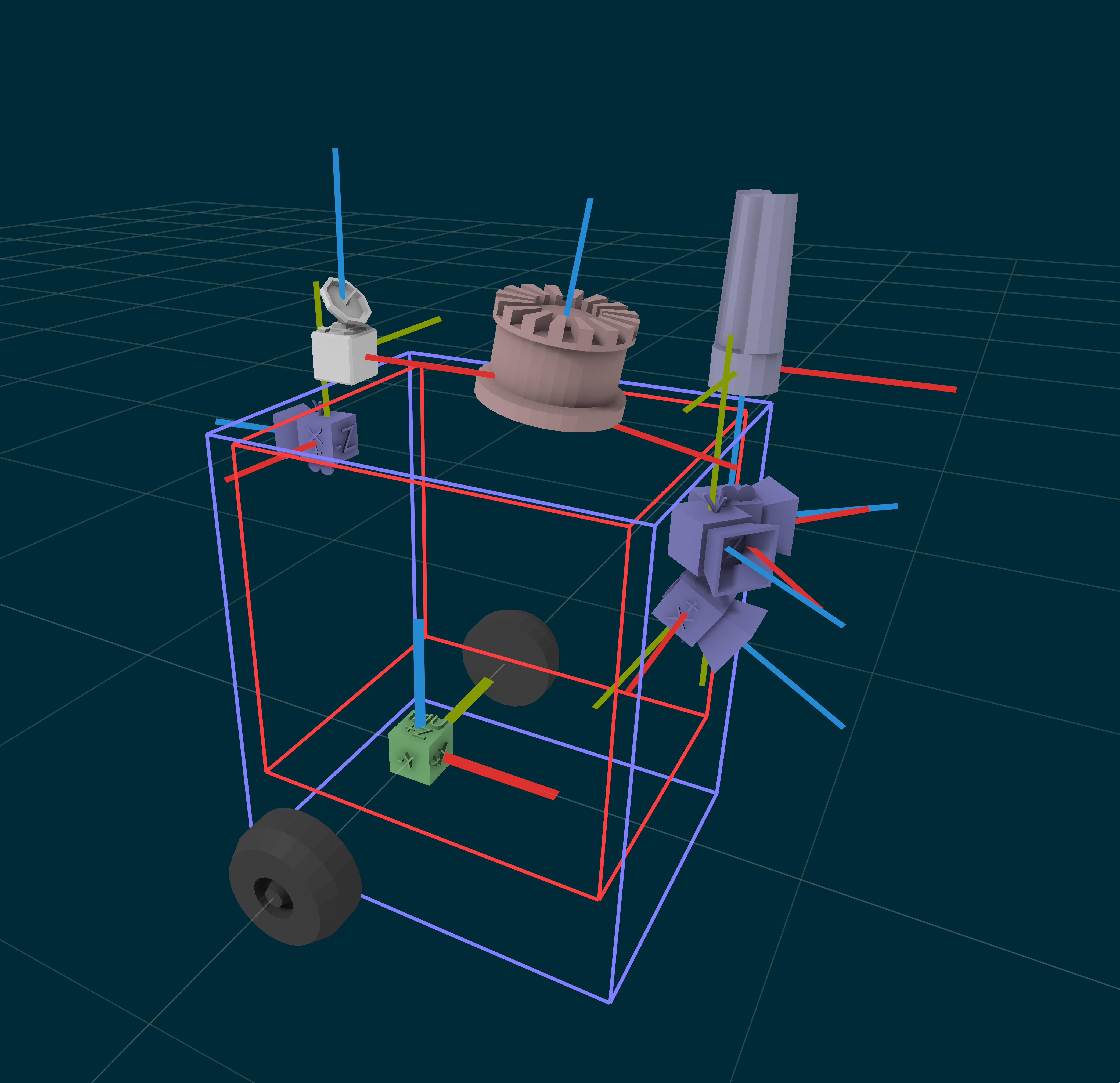

Multi-modal sensor calibration is critical for achieving sensor fusion for robotics, autonomous vehicles, mapping, and other perception-driven applications. Traditional calibration methods, which rely on structured environments with checkerboards or targets, are complex, expensive, time-consuming, and don’t scale. Main Street Autonomy’s Calibration Anywhere software is an automatic sensor calibration solution that simplifies the calibration problem.

Calibration ensures that different perception sensors, like lidar, radar, camera, depth camera, IMU, wheel encoder, and GPS/GNSS, which capture diverse information like range, reflectivity, image, depth, and motion data, generate coherent sensor data streams that perceive the world in agreement with each other. For example, when an autonomous forklift approaches a pallet, a 3D lidar identifies the shape, size, and distance to the pallet and load, and stereo cameras running ML workflows identify the fork openings. With correct calibration, the camera-determined position of the fork openings will align with the lidar-determined outline of the pallet and load. Without correct calibration, sensor data can be misaligned, leading to problems like inaccurate picking, collision with obstacles, and unstable motion.

Traditional sensor calibration is the manual process of determining sensor intrinsics (corrections to sensor data for individual sensors, like lens distortion and focal length for cameras) and sensor extrinsics (the positions and orientations of sensors relative to each other in a shared coordinate system, often anchored at a reference point that relates to the kinematic frame and is used for motion planning and control). Calibrating two cameras together is relatively straightforward: it requires a printed target called a checkerboard and can take an engineer an hour to complete. Calibrating more cameras, or cameras vs. lidar, cameras vs. IMU, or lidar vs. IMU, is incrementally more difficult and requires additional targets and engineering effort.
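To make the two terms concrete, here is a minimal sketch of how extrinsics and intrinsics combine when a lidar point is projected into a camera image. All numbers are made up for illustration, the helper names are hypothetical, and for simplicity the lidar point is assumed to be expressed in a z-forward frame already. It is not how Calibration Anywhere works internally; it only shows why a small extrinsic error moves the projected pixel.

```python
def transform_point(rotation, translation, point):
    """Extrinsics: rotate, then translate, a 3D point into the camera frame."""
    return [
        sum(rotation[r][c] * point[c] for c in range(3)) + translation[r]
        for r in range(3)
    ]


def project_pinhole(fx, fy, cx, cy, point_cam):
    """Intrinsics: project a camera-frame point (z forward) to pixel coordinates."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (fx * x / z + cx, fy * y / z + cy)


# Hypothetical values, not taken from any real calibration.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
lidar_point = [0.625, 0.0, 2.0]          # e.g. a pallet corner seen by the lidar
good_translation = [-0.125, 0.0, 0.0]    # assumed true lidar-to-camera offset (m)
bad_translation = [-0.0625, 0.0, 0.0]    # same offset with a 6.25 cm error

pixel_good = project_pinhole(
    600.0, 600.0, 320.0, 240.0,
    transform_point(identity, good_translation, lidar_point),
)
pixel_bad = project_pinhole(
    600.0, 600.0, 320.0, 240.0,
    transform_point(identity, bad_translation, lidar_point),
)

print(pixel_good)                        # (470.0, 240.0)
print(pixel_bad[0] - pixel_good[0])      # 18.75 pixels of misalignment
```

In this toy setup, a 6.25 cm extrinsic error at 2 m range shifts the projected point by almost 19 pixels, which is the kind of camera-to-lidar disagreement described in the forklift example above.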

Main Street Autonomy’s Calibration Anywhere software is an automatic sensor calibration solution that works with any number, combination, and layout of perception sensors in any unstructured environment. No checkerboards or targets are required, and the calibration can be performed almost anywhere with no setup or environmental changes. The calibration process is fast and can take less than 10 minutes. No engineers or technicians are required. Calibration Anywhere generates sensor intrinsics, extrinsics, and time offsets for all perception sensors in one pass.

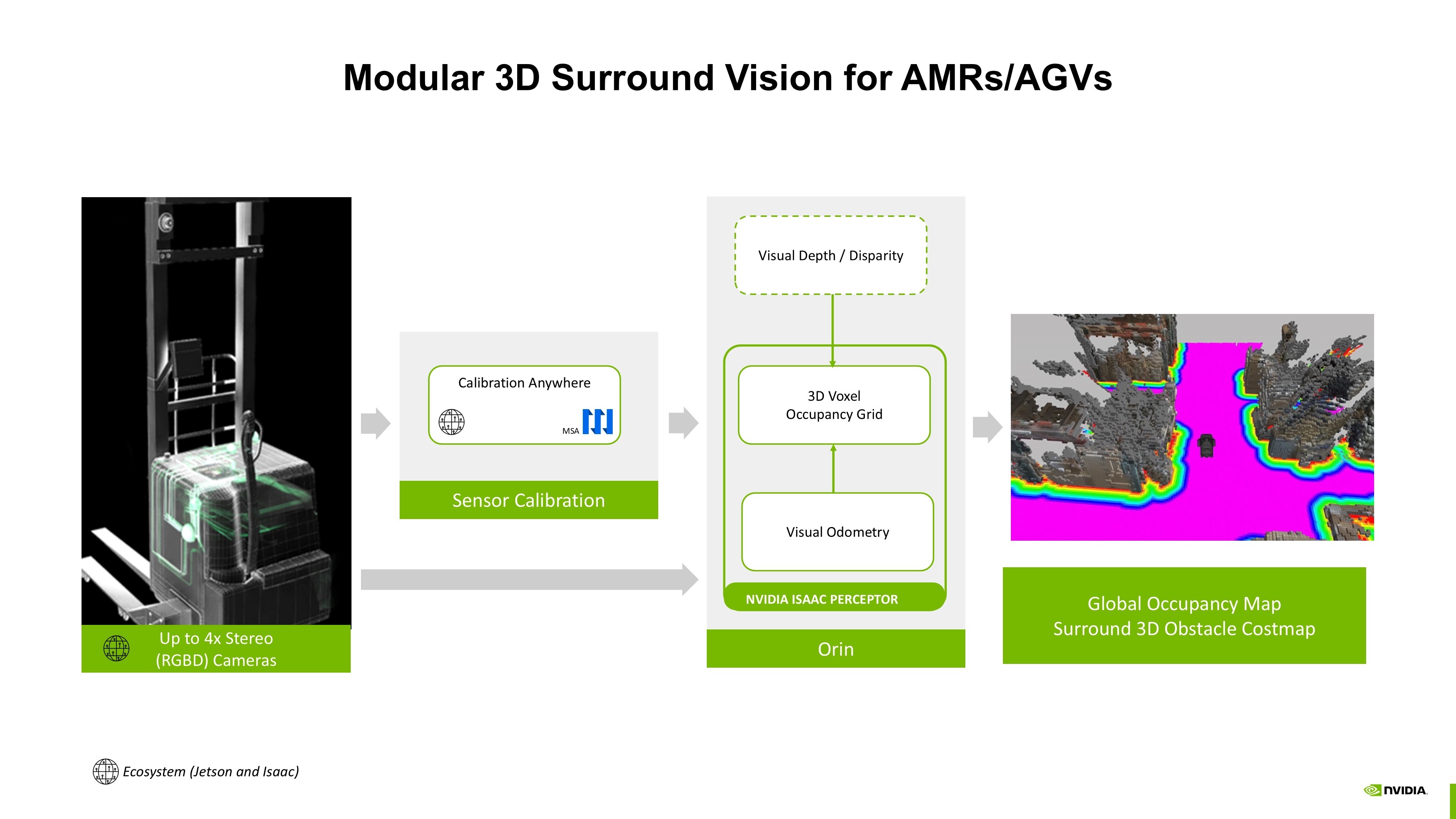

This tutorial is designed for engineers responsible for sensor calibration or those working with perception systems. In this post, you'll learn how to use the Calibration Anywhere solution to generate a calibration file that can be integrated into NVIDIA's Isaac Perceptor workflows.

Prerequisites

For the fastest turn-around time for the first calibration, an ideal configuration is:

- Environment includes:

  - Nearby textured static structure (nothing special, an office environment or loading dock or parking lot are fine, but calibrating cameras that are pointed at the ocean is complicated)

- Sensor system includes:

  - One of…

    - A 3D lidar

    - A 2D lidar

    - A stereo camera with known baseline

    - An IMU

  - One of…

  - If a 3D lidar is present

    - FOV of cameras should overlap at least 50% with FOV of lidar

    - Depth cameras should be able to see parts of the world that 3D lidar can see (overlap is not required, but objects that the lidar sees should be visible to the depth cameras once the robot has moved around)

- All sensors are rigidly connected during the calibration

- Sensors are moved manually, via teleoperation, or autonomously in a manner that doesn’t cause excessive wheel slip or motion blur

- Sensors are moved in two figure-8 movements where the individual circles don’t overlap and the diameter of the circles is >1m

- Sensors should approach textured static structure within 1m, where the structure fills most of the FOV of each camera

- Recording length is relatively short – aim for a 60s data collect, and no more than 5 minutes
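If the sensors are moved autonomously, the figure-8 motion described above could be scripted. The following is only a sketch under assumptions: the function name and parameters are hypothetical, the path is two tangent circles that touch at a single point (so they do not overlap), and how the waypoints are sent to a robot is left out entirely.

```python
import math


def figure_eight_waypoints(diameter_m=1.5, points_per_circle=36):
    """Return (x, y) waypoints for one figure-8 built from two tangent circles.

    The circles touch only at the origin, so they do not overlap.
    """
    if diameter_m <= 1.0:
        raise ValueError("circle diameter should be greater than 1 m")
    r = diameter_m / 2.0
    waypoints = []
    # Left circle, centred at (-r, 0): start at the origin, go counter-clockwise.
    for i in range(points_per_circle):
        a = 2.0 * math.pi * i / points_per_circle
        waypoints.append((-r + r * math.cos(a), r * math.sin(a)))
    # Right circle, centred at (+r, 0): start at the origin, go clockwise,
    # and include the final point so the path closes back at the origin.
    for i in range(points_per_circle + 1):
        a = math.pi - 2.0 * math.pi * i / points_per_circle
        waypoints.append((r + r * math.cos(a), r * math.sin(a)))
    return waypoints


waypoints = figure_eight_waypoints(diameter_m=1.5)
print(len(waypoints), "waypoints for one figure-8")
```

Running the generated path twice would give the two figure-8 movements; the direction of travel is chosen so that the heading is continuous where the two circles meet.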

Sensor systems that don’t meet the above requirements can still be calibrated, but they will have a longer turn-around time. Sensor data in non-ROS formats requires transformation, which also lengthens the turn-around time. Alternate movement procedures are possible for large or motion-constrained robots. Contact MSA for more information!

Procedure

The process of evaluating Calibration Anywhere is straightforward:

- Connect with MSA and describe your system.

- Capture sensor data while the sensor system moves.

- Upload the sensor data to MSA’s Data Portal.

- Receive a calibration package with Isaac Perceptor-compatible URDF output.

- Import the URDF into the Isaac Perceptor workflow.

Once MSA has configured Calibration Anywhere for your system, you can use the calibration-as-a-service solution, where you upload sensor data and download a calibration, or you can deploy Calibration Anywhere in a Docker container and run locally without sending data.

Watch how easy this is here: https://www.youtube.com/watch?v=LJOyHRnkW90

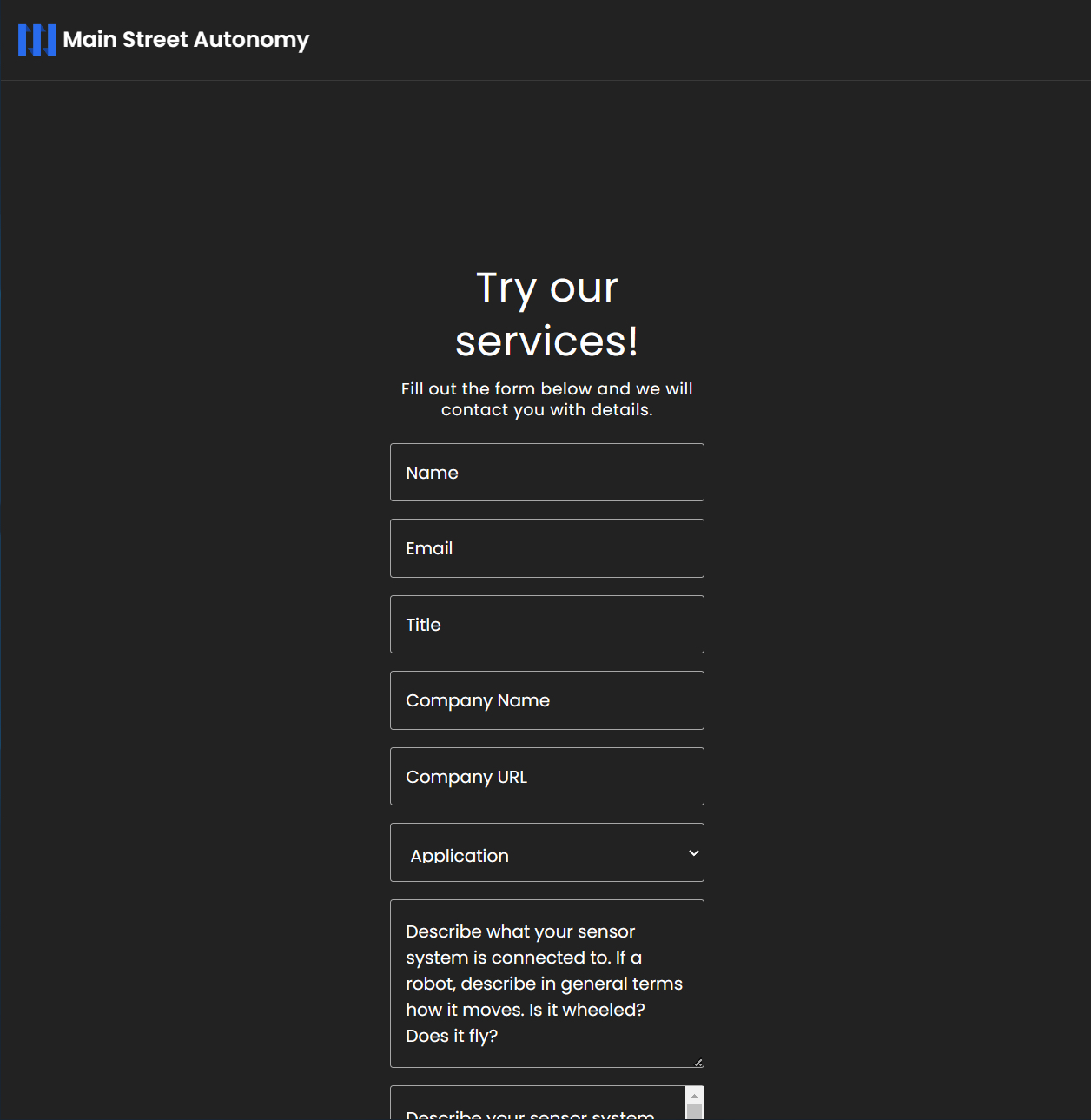

Step 1 - Connect with MSA and describe your system

Go to https://mainstreetautonomy.com/demo and fill out the form. MSA will contact you with any additional questions and send you credentials for using the MSA Data Portal.

Step 2 - Capture sensor data while the sensors move

Move the sensor system and capture sensor data as described above. Multiple (split) ROS bags are OK, but please ensure that the recording is continuous across them.

Data quality is crucial for calibration success! Please ensure:

- Data does not contain gaps or drops – check that compute, network, and disk buffers are not overrun and that data isn’t being lost during the bagging process

- Topics and messages are present – check that topics are present for all the sensors you expect to calibrate

- Timestamps are included and represent time of capture – we require per-point timestamps for 3D lidar and populated timestamps for all other sensor data
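The checks above can be partly automated before uploading. Here is a rough sketch, assuming you have already extracted the capture timestamps (in seconds) for each topic from your recording into plain Python lists with whatever tooling you normally use. The topic names, the function name, and the gap threshold are all hypothetical choices, not part of MSA's tooling.

```python
from statistics import median


def check_recording(stamps_by_topic, expected_topics, max_gap_factor=2.5):
    """Return a list of human-readable problems found in a recording."""
    problems = []
    for topic in expected_topics:
        stamps = stamps_by_topic.get(topic, [])
        if len(stamps) < 3:
            problems.append(f"{topic}: missing or nearly empty")
            continue
        if any(t <= 0.0 for t in stamps):
            problems.append(f"{topic}: unpopulated (zero) timestamps")
        periods = [b - a for a, b in zip(stamps, stamps[1:])]
        if any(p <= 0.0 for p in periods):
            problems.append(f"{topic}: timestamps are not strictly increasing")
        # A period much longer than the typical one suggests dropped messages.
        typical = median(periods)
        gaps = [p for p in periods if typical > 0 and p > max_gap_factor * typical]
        if gaps:
            problems.append(f"{topic}: {len(gaps)} gap(s), largest {max(gaps):.3f} s")
    return problems


# Hypothetical recording: the camera drops frames once, the lidar topic is absent.
recording = {
    "/camera/image": [100.0, 100.1, 100.2, 100.5, 100.6],
    "/imu/data": [100.00, 100.01, 100.02, 100.03],
}
problems = check_recording(
    recording, ["/camera/image", "/imu/data", "/lidar/points"]
)
for problem in problems:
    print(problem)
```

A check like this cannot confirm that timestamps represent the true time of capture, or that a 3D lidar provides per-point timestamps; those still need to be verified against the sensor driver configuration.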

Step 3 - Upload the sensor data to MSA’s Data Portal

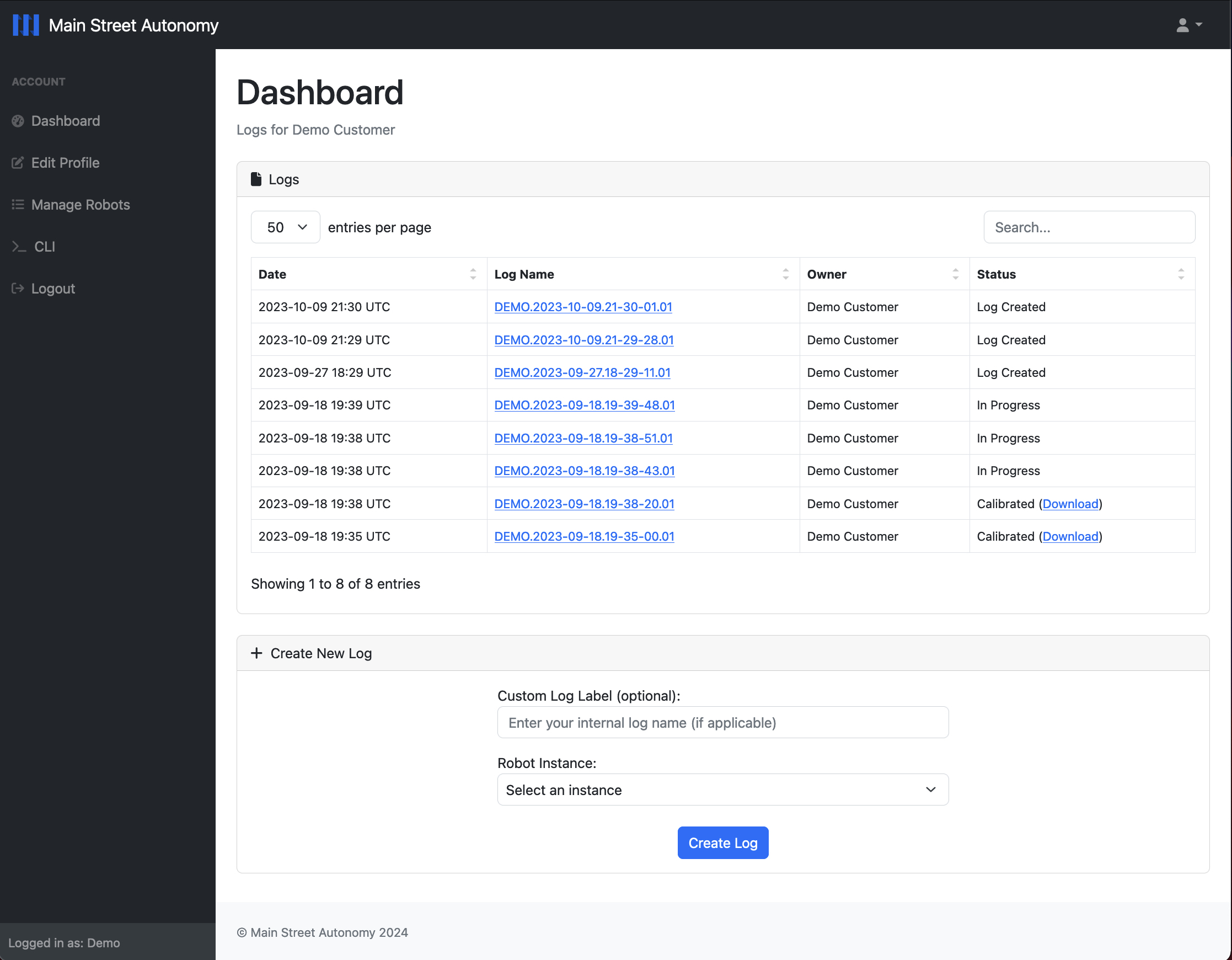

Go to https://upload.mainstreetautonomy.com and authenticate with your MSA-provided credentials. Click the “Manage Robots” button and create a Platform (a specific arrangement of sensors, such as ‘DeliveryBotGen5’) and an Instance (a specific robot belonging to the Platform, such as ‘12’, or ‘Mocha’ if you use names). From the “Dashboard” page, enter a label for your sensor data, select the Robot Instance the data was collected from, and upload your sensor data.

Step 4 - Receive a calibration package with Isaac Perceptor-compatible URDF output

MSA will use the Calibration Anywhere solution to calibrate the sensors used to capture the sensor data. This process can take a few days or longer for complicated setups. When complete, the calibration will be available for download from the Data Portal, as shown in Figure 1. A notification email will be sent to the user who uploaded the data.

The calibration output includes the following:

- extrinsics.urdf – NVIDIA Isaac Perceptor compatible URDF format

- extrinsics.yaml – sensor extrinsics in a TF-friendly format

  - Includes position [x,y,z] and quaternion [x,y,z,w] transforms between the reference point and the 6DoF pose of cameras, 3D lidars, imaging radars, and IMUs, the 3DoF pose of 2D lidars, and the 3D position of GPS/GNSS units

- wheels_cal.yaml – sensor extrinsics

  - Includes axle track estimate (in meters)

  - Includes corrective gain factors for left and right drive wheel speed (or meters-per-tick)

- <sensor_name>.intrinsics.yaml – sensor intrinsics:

  - Includes OpenCV-compatible intrinsics for each imaging sensor: a model that includes a projection matrix and a distortion model

    - Supporting equidistant (fisheye), ftheta3, rational polynomial, and plumb bob models

  - Includes readout time for rolling shutter cameras

- ground.yaml – ground detection:

  - Includes ground plane relative to sensors, for robots that move on a flat floor

- time_offsets.yaml – timestamp corrections:

  - Includes relative time offsets for cameras, lidars, radars, IMUs, wheel encoders, and GPS/GNSS units
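As an illustration of how two of these outputs might be consumed, the sketch below applies a position plus [x,y,z,w] quaternion to a point and uses an axle track and wheel gains in a differential-drive model. The exact YAML keys are not shown in this post, so the values are passed in directly. The function names, the direction of the transform (sensor frame into the reference frame), and the way the gains multiply raw wheel speeds are assumptions made for this example.

```python
import math


def quat_to_rotation(qx, qy, qz, qw):
    """Rotation matrix from a quaternion given in [x, y, z, w] order."""
    n = math.sqrt(qx * qx + qy * qy + qz * qz + qw * qw)
    qx, qy, qz, qw = qx / n, qy / n, qz / n, qw / n
    return [
        [1 - 2 * (qy * qy + qz * qz), 2 * (qx * qy - qz * qw), 2 * (qx * qz + qy * qw)],
        [2 * (qx * qy + qz * qw), 1 - 2 * (qx * qx + qz * qz), 2 * (qy * qz - qx * qw)],
        [2 * (qx * qz - qy * qw), 2 * (qy * qz + qx * qw), 1 - 2 * (qx * qx + qy * qy)],
    ]


def sensor_to_reference(position, quaternion, point_in_sensor):
    """Map a sensor-frame point into the reference frame (assumed convention)."""
    rot = quat_to_rotation(*quaternion)
    return [
        sum(rot[r][c] * point_in_sensor[c] for c in range(3)) + position[r]
        for r in range(3)
    ]


def body_velocity(left_raw, right_raw, left_gain, right_gain, axle_track_m):
    """Forward speed (m/s) and yaw rate (rad/s) for a differential drive,
    assuming the corrective gains multiply the raw wheel speeds."""
    v_left = left_gain * left_raw
    v_right = right_gain * right_raw
    return (v_left + v_right) / 2.0, (v_right - v_left) / axle_track_m


# Hypothetical sensor pose: 1 m forward, 0.5 m up, yawed 90 degrees.
s = math.sin(math.pi / 4.0)
point_ref = sensor_to_reference([1.0, 0.0, 0.5], [0.0, 0.0, s, s], [2.0, 0.0, 0.0])
print([round(v, 6) for v in point_ref])

# Hypothetical wheel calibration: equal raw speeds, slightly unequal gains.
speed, yaw_rate = body_velocity(1.0, 1.0, 0.98, 1.02, axle_track_m=0.5)
print(round(speed, 6), round(yaw_rate, 6))
```

With the example values, a point 2 m ahead of the yawed sensor lands at roughly (1, 2, 0.5) in the reference frame, and a 2% gain mismatch between the wheels shows up as a small yaw rate even though both encoders report the same raw speed.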

Step 5 - Import the URDF into Isaac Perceptor workflows

Copy the extrinsics.urdf file to /etc/nova/calibration/isaac_calibration.urdf. This is the default URDF path used by NVIDIA Isaac Perceptor. Figure 4 shows the workflow.
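The copy can be done by hand, but a small script can add a sanity check first. This is a minimal sketch: the function name is hypothetical, and the only validation is that the file parses as XML with a `robot` root element, which is the standard URDF root. It does not check that the contents match what Isaac Perceptor expects.

```python
import shutil
import xml.etree.ElementTree as ET
from pathlib import Path

# Default URDF path used by NVIDIA Isaac Perceptor, as stated above.
DEFAULT_URDF_PATH = Path("/etc/nova/calibration/isaac_calibration.urdf")


def install_urdf(source, destination=DEFAULT_URDF_PATH):
    """Check that `source` parses as a URDF, then copy it to `destination`."""
    source = Path(source)
    root = ET.parse(source).getroot()  # raises ParseError on malformed XML
    if root.tag != "robot":
        raise ValueError(
            f"{source} does not look like a URDF (root tag is <{root.tag}>)"
        )
    destination = Path(destination)
    destination.parent.mkdir(parents=True, exist_ok=True)
    shutil.copyfile(source, destination)
    return destination
```

Calling `install_urdf("extrinsics.urdf")` from the directory holding the downloaded calibration package would place the file at the default path. Writing under /etc typically requires elevated permissions, and it may be worth backing up any existing calibration file first.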

Conclusion

Calibrating sensors using MSA's Calibration Anywhere and integrating the results with NVIDIA Isaac Perceptor workflows requires careful attention to sensor setup and data collection. Ensuring that the sensor system meets the prerequisites described above is important for a fast calibration.

By following this tutorial, you’ll be well-prepared to execute precise sensor calibration for your robotics or autonomous system project.

About William Sitch:

William Sitch is an engineer and technical speaker who leads customer-facing activities as the Chief Business Officer at Main Street Autonomy. He would love to learn about your autonomous robots and any technical challenges you’re struggling with. William is an aspiring athlete who raced in the Ironman World Championships in Nice, France in 2023.